Behind the Rack: How Tubbo Built a Free Minecraft Hosting Service

What happens when a Minecraft YouTuber turns a homelab into a hosting company? Tubbo’s PlayHosting is chaotic, clever, and surprisingly functional.

Not all homelab owners get to turn their passion into a career. For Tubbo, a popular Minecraft YouTuber and content creator, a growing interest in infrastructure evolved into PlayHosting, a free Minecraft server hosting platform designed to support his community and beyond. What started as a side project has rapidly matured into a functional hosting service, backed by custom hardware, donated equipment, and a surprising amount of engineering.

In this article, we explore the infrastructure behind PlayHosting, from servers and networking to control panels and scaling challenges, to understand what it takes to build and maintain a hosting company in your own office.

The Mission

Tubbo’s journey into hosting began like many homelab stories: a personal need that spiraled into something much bigger. Initially hoping to run a few Minecraft servers for friends, he quickly realized he could use his platform to offer something more ambitious. Troubled by the inflated prices and limitations of modern game hosting, he launched PlayHosting with the goal of providing free Minecraft servers to anyone, especially community members who might not have the means or technical knowledge to run one themselves. In early Reddit posts, he described a desire to repurpose existing hardware, work with local ISPs for bandwidth, and create a worker-owned platform sustained by cooperative effort, donations, and advertising.

To bring that vision to life, Tubbo partnered with Lilypad, forming the foundation of what would become PlayHosting. What began as a homelab experiment quickly evolved into a functioning platform with real infrastructure, custom code, and tens of thousands of hosted instances. PlayHosting offers free Minecraft server hosting with minimal restrictions, allowing players to upload their own files, open ports for features like voice chat, and avoid the forced shutdowns or AFK kick policies seen on other platforms. The project is financially sustained through a mix of Google AdSense revenue, referral partnerships, and donated hardware from sponsors seeking visibility in Tubbo’s content. Bandwidth is largely covered through Tubbo’s existing streaming business, leaving only power bills to be paid by the project itself. While there are no formal paid plans, premium features may eventually be offered to top supporters. Ultimately, PlayHosting reflects a hybrid of community-driven service and content-backed funding, enabled by Tubbo’s unique position in both tech and streaming communities.

The Infrastructure

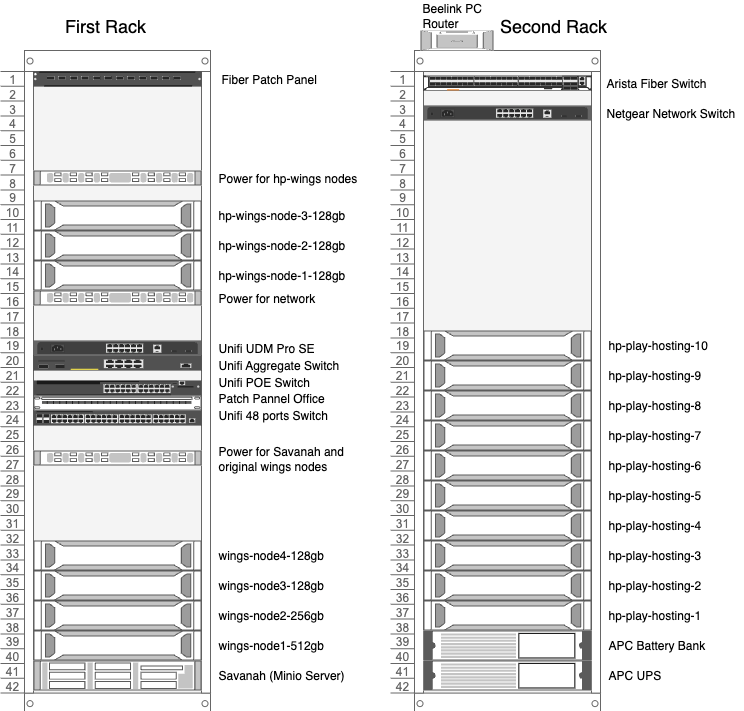

At the heart of PlayHosting is a growing collection of rack-mounted servers housed in Tubbo’s office space. What started as a single homelab rack has expanded into a multi-node environment, carefully assembled from a mix of enterprise-grade and consumer components. The first generation of hosting nodes, labeled Wings-[1–4], was built using Supermicro H11DSi motherboards and AMD EPYC 7551 processors. Wings-1 and Wings-2 feature dual CPUs and high memory capacity (512 GB and 256 GB respectively), while Wings-3 and Wings-4 are single-socket builds due to an ordering mishap involving the single-CPU EPYC 7551P. All nodes run Proxmox as the base hypervisor to simplify remote management, snapshotting, and resource allocation. Virtual machines are standardized on Debian 12, offering a clean and stable environment for each Wings instance. Wings-1 also hosts the main Pterodactyl dashboard, making it the most sensitive node in the cluster and, to avoid downtime, the only one whose RAM is not overcommitted.

Supporting these nodes is a dedicated storage server named Savannah, built around a Supermicro H11SLi motherboard and a chassis with 12 drive bays. Eleven bays are populated with 18 TB drives in a RAID 5 configuration, with the twelfth used for the system boot disk. While the choice of RAID 5 over a more redundant configuration raises eyebrows given the size of the array, the system is primarily used for object storage and backups, not live data. MinIO runs on Savannah, exposing a simple S3-compatible interface that integrates directly with PlayHosting’s backup and restore pipeline. This object storage layer powers the platform’s “Limbo” system, allowing servers to be suspended, backed up, and unloaded from memory without losing data.
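To put the RAID 5 trade-off in numbers, here is a minimal sketch of the capacity arithmetic for Savannah's array, using the drive count and size quoted above. The function names are illustrative, and the figures are raw decimal terabytes before any filesystem or MinIO overhead, so the real usable space will be somewhat lower.

```python
def raid5_usable_tb(drive_count: int, drive_size_tb: int) -> int:
    """RAID 5 spends one drive's worth of space on parity."""
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drive_count - 1) * drive_size_tb


def raid6_usable_tb(drive_count: int, drive_size_tb: int) -> int:
    """RAID 6 spends two drives' worth of space on parity."""
    if drive_count < 4:
        raise ValueError("RAID 6 needs at least four drives")
    return (drive_count - 2) * drive_size_tb


# Eleven 18 TB drives, as described for Savannah.
raid5 = raid5_usable_tb(11, 18)
raid6 = raid6_usable_tb(11, 18)
print(f"RAID 5: {raid5} TB usable, survives one drive failure")
print(f"RAID 6: {raid6} TB usable, survives two drive failures")
```

With these inputs, RAID 5 yields 180 TB against 162 TB for RAID 6. The extra 18 TB comes at the price of tolerating only a single drive failure, which is the concern raised above for an array of this size.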

To better support Minecraft’s demanding single-thread performance needs, Tubbo introduced a second generation of hosting nodes using the hp- prefix, short for High Performance. These systems are built around the AMD Ryzen 9 7950X, chosen for its high clock speed and strong price-to-performance ratio, which makes it ideal for game servers where world generation and block updates are heavily CPU-bound. Each server uses a Gigabyte B650M-K motherboard, two Crucial NVMe drives in mirrored RAID, and 128 GB of DDR5 memory. Servers are loaded with as much memory as possible, since memory is the main limit on how many Minecraft servers a node can run. The nodes are housed in 2U Irwin cases and powered by Corsair PSUs. The first batch, labeled hp-wings-[1–3], resides in the original rack and was deployed alongside the launch of the service. A second wave of ten newer servers, labeled hp-play-hosting-[1–10], was installed in a new rack. These are activated gradually, either to increase capacity or to serve specialized client needs. Although the platform is built to scale, every new server adds to the operating cost, making growth a careful balance of user demand, available infrastructure, and financial sustainability.

The Network

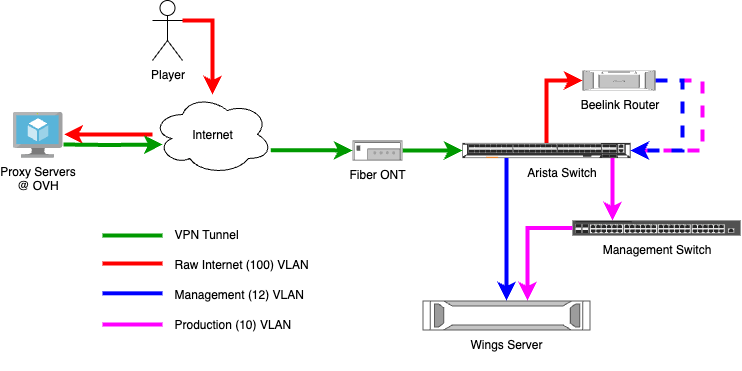

Before any traffic reaches PlayHosting’s internal network, it first passes through a layer of cloud-hosted proxy servers. These reverse proxies are hosted at OVH, a major European cloud provider known for its robust DDoS protection and high-throughput capacity. A reverse IP lookup on one of PlayHosting’s active nodes confirms its location in an OVH UK block, suggesting that Tubbo uses these nodes to terminate public-facing traffic before tunneling it back to the office data center. This design offloads key responsibilities, including Layer 7 filtering, IP allocation, and NAT, from the local infrastructure, dramatically reducing the risk of service interruptions due to direct attacks. OVH’s infrastructure handles volumetric DDoS attacks at the network level, while application-layer filtering is managed by custom rules deployed on the proxy servers themselves. These rules limit each IP to a maximum of five concurrent connections, automatically dropping abusive traffic and mitigating threats like Slowloris before they reach the internal network. It’s a practical and cost-effective solution, allowing PlayHosting to offer free hosting to thousands of users without exposing its internal network to constant risk.
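The per-IP rule described above can be illustrated with a small sketch. This is not PlayHosting's actual proxy configuration, which is not public. It assumes the limit of five applies to connections open at the same time, and the `ConnectionLimiter` class and its method names are hypothetical.

```python
from collections import defaultdict

MAX_CONNECTIONS_PER_IP = 5  # the per-IP figure quoted in the article


class ConnectionLimiter:
    """Tracks open connections per client IP and rejects any beyond the limit."""

    def __init__(self, limit: int = MAX_CONNECTIONS_PER_IP) -> None:
        self.limit = limit
        self.active = defaultdict(int)  # ip -> number of currently open connections

    def try_open(self, ip: str) -> bool:
        """Return True and count the connection, or False if the IP is at its limit."""
        if self.active[ip] >= self.limit:
            return False
        self.active[ip] += 1
        return True

    def close(self, ip: str) -> None:
        """Release one slot when a connection from this IP ends."""
        if self.active.get(ip, 0) > 0:
            self.active[ip] -= 1
            if self.active[ip] == 0:
                del self.active[ip]


limiter = ConnectionLimiter()
# A Slowloris-style client opens many connections and never finishes them.
results = [limiter.try_open("203.0.113.7") for _ in range(8)]
print(results.count(True), "accepted,", results.count(False), "dropped")
```

Because a Slowloris attack depends on holding many half-open connections from a small number of addresses, capping the count per IP blunts it without affecting ordinary players, who rarely need more than a handful of connections at once.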

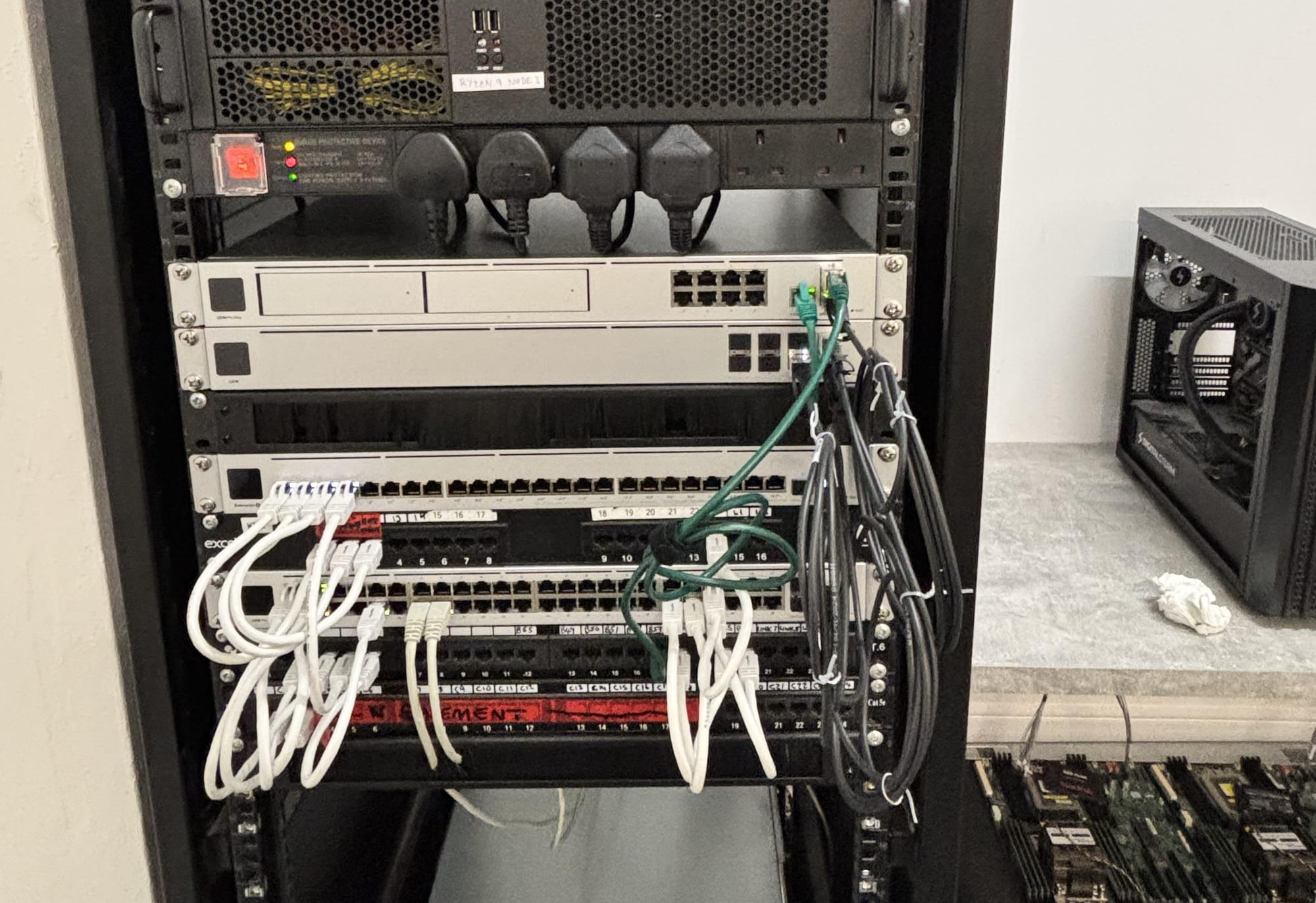

PlayHosting’s hosting network infrastructure has grown alongside the platform itself, evolving from homelab simplicity to something closer to a production-grade setup. Early nodes used a mix of 2.5 Gbps and 10 Gbps connections, routed through UniFi switches. As demand increased, all server nodes were upgraded to 10 Gbps SFP+, prompting the introduction of an Arista 7050ST-54, a datacenter-class switch chosen for its performance and affordability on the secondhand market. External connectivity is split across two lines: a 1 Gbps symmetrical fiber line originally used for streaming and office traffic, and a leased 1 Gbps fiber line with expansion potential up to 12×10 Gbps, reserved for hosting workloads.

At the routing layer, the platform initially relied on a UniFi Dream Machine Pro (UDM Pro), which quickly became a bottleneck. While rated for multi-gigabit throughput, the UDM struggled with traffic inspection and routing under load, often capping out around 500 Mbps in practice. To address this, Tubbo replaced it with a Beelink mini PC running VyOS, a lightweight, enterprise-grade routing OS. The system uses an Intel i7 for improved processing power, paired with native 2.5 Gbps Ethernet and a USB 2.5 Gbps adapter to support dual uplinks. While not an ideal solution long-term, the new router provides significantly more headroom and flexibility than the UDM it replaced.

The Panel

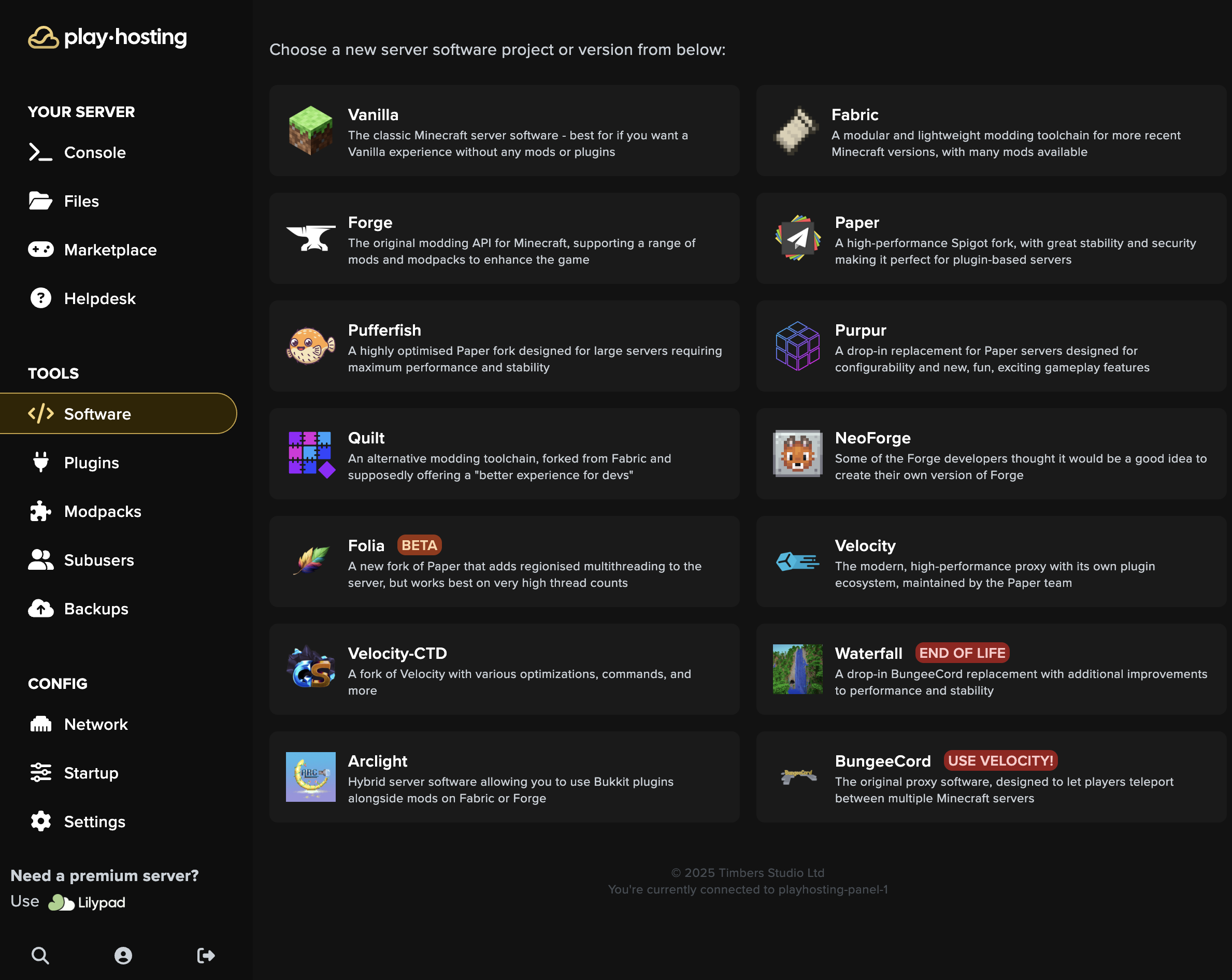

PlayHosting is built around Pterodactyl, an open-source server management panel designed for container-based game hosting. At its core, Pterodactyl consists of two parts: a central web panel that users interact with, and Wings, a lightweight daemon that runs on each host to start, stop, and manage game servers in Docker containers. At PlayHosting, each Wings instance operates in its own virtual machine, isolated for security and resource control.

In PlayHosting’s setup, the main panel runs on Wings-1. What makes this deployment unique is the level of customization applied. Developed in collaboration with the team behind Lilypad, the interface has been reskinned and extended to reflect the PlayHosting brand. Under the hood, the codebase includes additional tools and patches, many of which are proprietary, to support features like advanced server orchestration, dynamic backup handling, and tailored plugin management.

From a user’s perspective, the PlayHosting panel offers a streamlined experience designed to make server management accessible, even for beginners. Built on top of Pterodactyl, the interface has been extended with custom tools similar to premium offerings seen on platforms like BuiltByBit. Upon logging in, users can deploy a Minecraft server with just a few clicks, configure core settings, and choose from curated plugin sets. Server version selection, plugin installation, and server startup are all handled through intuitive menus. Users can bind custom domains, open ports for features like voice chat, and manage files through the panel’s built-in file manager. Much of this functionality is powered by Lilypad’s backend enhancements, giving PlayHosting a polished, cohesive front end that rivals commercial hosts, despite being offered entirely free of charge.

One of PlayHosting’s most innovative features is the Limbo system, a custom orchestration layer designed to offload unused servers without losing user data. When a server becomes inactive, typically after ten minutes of idle time, it is gracefully shut down, its container is deleted, and the full state is backed up to object storage. This backup is handled via MinIO, running on the Savannah storage server, and follows an S3-compatible format. Originally, Limbo backups were node-specific and could only be restored on the same Wings server. As the system matured, this limitation was removed in favor of a global restore process, allowing suspended servers to be restored on any available node. This greatly improved scheduling flexibility, especially in cases of hardware maintenance or balancing load across the fleet. While Limbo now plays a crucial role in scaling the platform efficiently, it wasn’t without growing pains: early versions introduced restore bottlenecks and coordination bugs that were responsible for some of PlayHosting’s first public outages. Even so, it remains a cornerstone of the service’s resource strategy and one of the clearest examples of how thoughtful automation can stretch homelab infrastructure into production territory.
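The Limbo lifecycle described above (idle detection, shutdown, backup, container removal, and restore on any node) can be sketched as follows. This is only an illustration, since the real orchestration code is proprietary. The `GameServer` and `Limbo` names are hypothetical, a plain dictionary stands in for the MinIO bucket, and picking the node with the most free memory is an assumed placement policy, not a documented one.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

IDLE_TIMEOUT_SECONDS = 10 * 60  # "ten minutes of idle time" from the article


@dataclass
class GameServer:
    server_id: str
    node: Optional[str]   # node running the container, or None while in limbo
    state: bytes          # stand-in for the server's world and config files
    last_activity: float  # timestamp of the last player activity, in seconds


@dataclass
class Limbo:
    # Stand-in for an S3-compatible bucket: object key -> archived server state.
    object_store: Dict[str, bytes] = field(default_factory=dict)

    def suspend_if_idle(self, server: GameServer, now: float) -> bool:
        """Back up and unload a server that has been idle for the full timeout."""
        if server.node is None or now - server.last_activity < IDLE_TIMEOUT_SECONDS:
            return False
        # A real system would stop the game process gracefully before this point.
        self.object_store[server.server_id] = server.state  # upload the backup
        server.state = b""   # the container and its local files are deleted
        server.node = None   # the server no longer occupies memory on any node
        return True

    def restore(self, server: GameServer, free_memory_gb: Dict[str, int], now: float) -> str:
        """Restore a suspended server onto any node (assumed: most free memory)."""
        target = max(free_memory_gb, key=lambda name: free_memory_gb[name])
        server.state = self.object_store[server.server_id]  # download the backup
        server.node = target
        server.last_activity = now
        return target


limbo = Limbo()
server = GameServer("demo-server", "hp-wings-1", b"world-data", last_activity=0.0)
limbo.suspend_if_idle(server, now=600.0)
print(limbo.restore(server, {"hp-wings-1": 8, "hp-wings-2": 32}, now=700.0))
```

Because the backup is keyed by server ID and not by node, the restore step is free to choose a different host from the one the server last ran on. That is the property the move from node-specific to global restores provides.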

The Future

From the outside, it’s tempting to offer advice, and plenty has already been given, especially in YouTube comments and Reddit threads. But Tubbo isn’t building PlayHosting alone. A small team of experienced developers, DevOps engineers, and system administrators now contributes to the project, helping guide infrastructure decisions and resolve scaling challenges. Many of the platform’s quirks reflect its origins: a homelab rapidly evolving into a public service. And rather than hide that reality, Tubbo seems to embrace it. He builds publicly, learns out loud, and makes space for experimentation, even when it breaks production. In doing so, PlayHosting becomes more than just a hosting platform: it’s a case study in how far curiosity, community, and technical stubbornness can take you when you’re willing to build while everyone is watching. That unpredictability, and the transparency that comes with it, is exactly what makes PlayHosting so brilliant to follow.