Storm Tome: Worldbuilding and the Limits of Generative AI

Can generative AI help build a fantasy RPG? Storm Tome explores what works, what breaks, and why human creativity is still essential.

The idea of building fantasy worlds is not new. Countless creators have imagined realms of magic, conflict, and exploration, often over years of careful iteration. What is new, however, is the ability to enlist generative AI as a creative partner in that process.

Storm Tome is a roleplaying game project designed with the help of generative AI tools. It began as an offshoot of a prototype RPG, BladeQuest, and evolved into a full setting and ruleset intended to support future creative experiments. The objective was clear: use modern generative AI agents to accelerate world-building, system design, and documentation, while exploring the limitations of these technologies along the way.

This article follows that journey. It examines how generative AI contributed to Storm Tome's development, where it fell short, and what the project reveals about the current state of AI-assisted creativity. From naming conventions and lore generation to combat mechanics and collaborative tooling, the lessons learned offer a grounded perspective on working with generative AI in large-scale creative projects.

Building Worlds

Building fantasy worlds has long been a creative outlet for designers, writers, and hobbyists. For some, it is a passing interest. For others, it becomes a lifelong pursuit. Storm Tome falls into the latter category. While the project itself began as an experiment in using generative AI, its roots stretch back decades.

The initial spark came from a digital game prototype named BladeQuest. Designed as a branching narrative RPG, BladeQuest explored how artificial agents could be used to generate storylines and manage player state. As development progressed, it became clear that the project needed more than just code. It required a setting: something flexible, reusable, and rich enough to support multiple creative experiments. The solution was to build a full tabletop-style roleplaying game alongside the digital system, creating a foundation that could support both worlds.

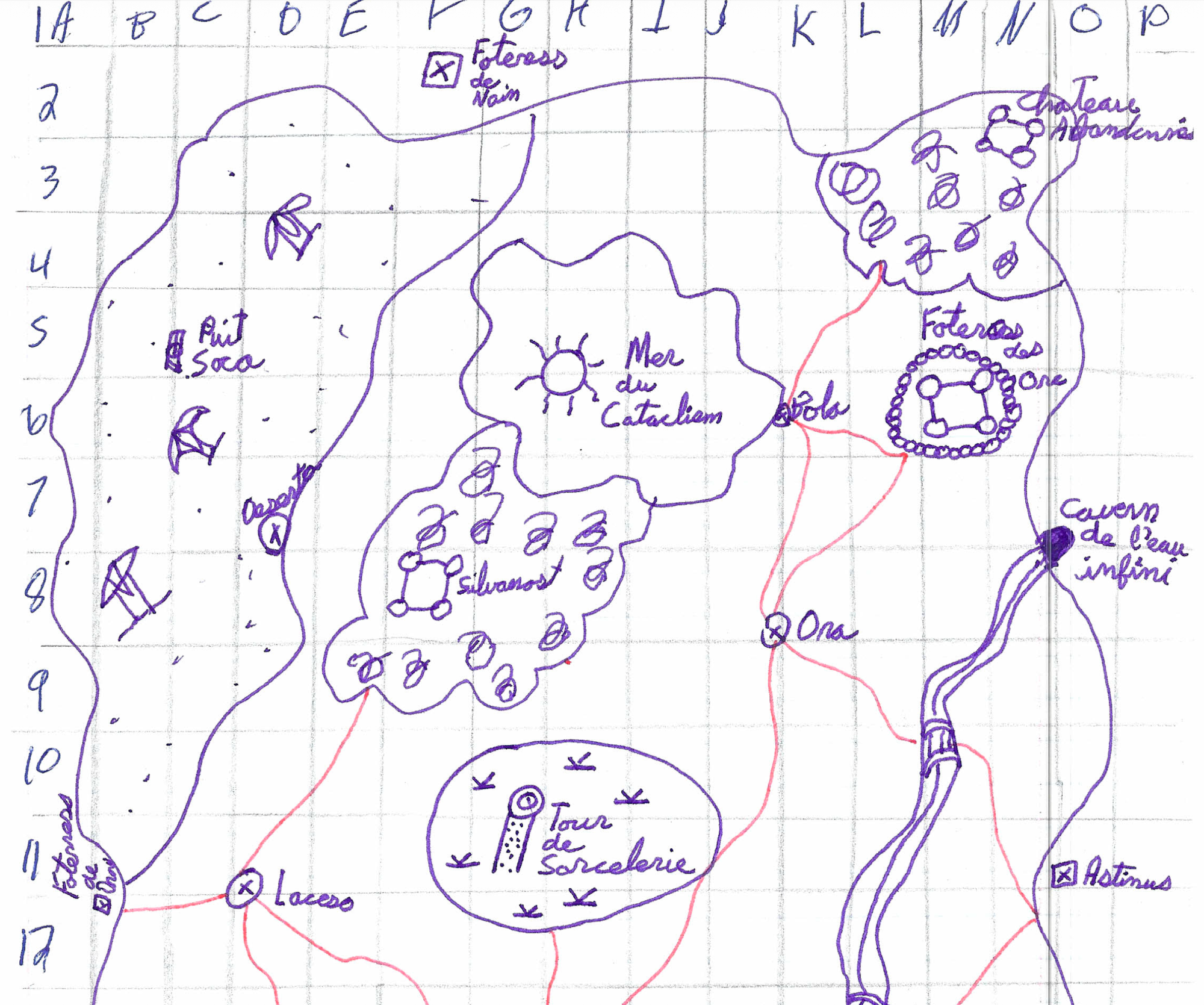

This wasn’t unfamiliar territory. World-building had been a recurring hobby for over 30 years, starting in adolescence with hand-drawn maps and fantasy timelines. What made Storm Tome different was its goal: to see just how much of the creative process could be supported, or even accelerated, by generative AI.

Establishing a design identity was the next challenge. Many tabletop RPGs suffer from steep onboarding curves, often requiring players to read dozens of pages before taking a single action. Video games, by contrast, teach through play. That became one of Storm Tome’s core goals: reduce friction at the beginning and gradually introduce complexity. The project drew inspiration from a wide range of fantasy sources, including Dungeons & Dragons, Black Eye, Final Fantasy, Dragon Warrior, Dragonlance, Magic: The Gathering, and Forgotten Realms. The design philosophies of these worlds helped shape both the rules and the setting of the new one.

Finding a Name

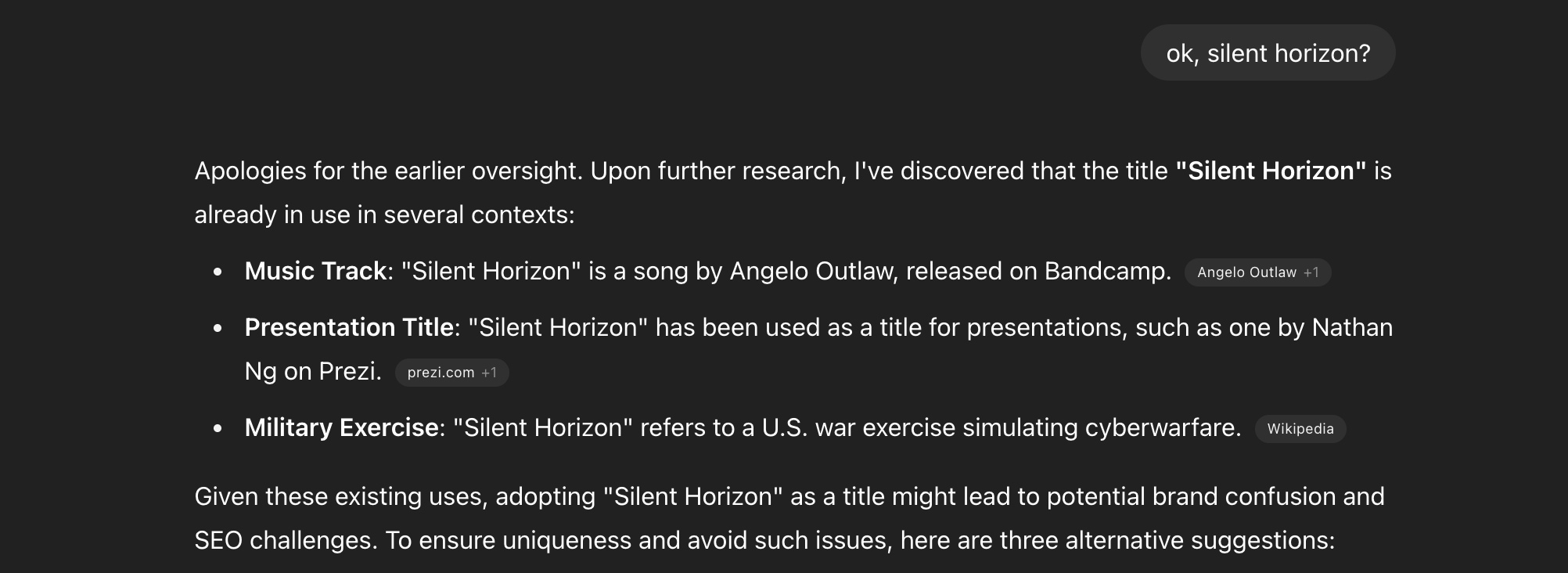

A distinct name is often the first step in defining a new intellectual property. It must be memorable, meaningful, and legally usable. For Storm Tome, this became an early challenge that revealed a clear limitation in generative AI.

The new identity needed to be both original and easy to find on search engines. The name BladeQuest was already used by various other projects and could lead to confusion. Generative AI seemed like a reasonable tool for the task. When prompted, it quickly produced dozens of name suggestions, some thematic and others more abstract. However, most were already in use. Even when explicitly asked to check each name's uniqueness against its training data and web searches, the model could not reliably distinguish between available and existing names. It often returned names with known conflicts or failed to flag existing ones entirely.

The solution was to change how the AI was used. Instead of generating full names, it helped identify strong thematic words. The structure was simple: combine a dramatic concept, like “Storm,” with a medieval object, like “Tome.” This approach worked much like a guided random name generator. The AI provided the ingredients, but a human made the final selection. After testing several combinations, Storm Tome was chosen.
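The "guided random name generator" idea can be sketched in a few lines of Python. This is only an illustration of the pattern described above, not the tooling used on the project: the word lists, the `candidate_names` helper, and the `already_taken` set are hypothetical placeholders. The availability check in particular is a stand-in for the manual search a human still has to do.

```python
from itertools import product

# Hypothetical ingredient lists. In the project, words like these came from
# AI suggestions; only "Storm" and "Tome" are taken from the article.
DRAMATIC_CONCEPTS = ["Storm", "Ember", "Frost"]
MEDIEVAL_OBJECTS = ["Tome", "Crown", "Lantern"]


def candidate_names(concepts, objects, taken=frozenset()):
    """Return every 'Concept Object' pairing that is not in the taken set."""
    names = [f"{concept} {obj}" for concept, obj in product(concepts, objects)]
    return [name for name in names if name not in taken]


# Placeholder for names a human has checked and ruled out. This is not a
# real availability lookup, and the entry below is purely illustrative.
already_taken = {"Frost Crown"}

shortlist = candidate_names(DRAMATIC_CONCEPTS, MEDIEVAL_OBJECTS, already_taken)
for name in shortlist:
    print(name)
```

The generator only enumerates combinations. Choosing among them, and confirming that a name is actually free to use, remains a human step.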

In this situation, generative AI could assist in shaping a creative direction, but it could not produce uniqueness.

World Building

Once the project had a name, the next step was to define the world around it. From the beginning, the story was centered on a powerful magical artifact, the Storm Tome, and the cataclysm it left behind. The goal was to create a setting where players would not only explore the consequences of a shattered world, but also pursue a long-term objective: recovering the lost pages of the tome.

To support this premise, the world needed more than just a good-versus-evil conflict. The factions were built with competing ideologies and overlapping goals. Rather than two opposing sides, the setting introduced three major powers, each shaped by the fallout of the Storm Tome's destruction and each pursuing the fragments for their own reasons. This structure allowed for a more dynamic and morally complex world, where no faction was entirely right or wrong.

Generative AI proved useful in filling out this foundation. With the right prompts and structured templates, it could generate detailed faction profiles, historical timelines, political ideologies, and character motivations. The Ashen Dominion, the Silver Pact, and the kingdom of Sable all emerged through this process, combining AI-generated drafts with human refinement.

The key to this efficiency was format. By defining what each faction or character entry needed to include, the AI could work within clear boundaries. This accelerated early development and made it easier to maintain a consistent tone.
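As a rough sketch of what "defining what each entry needs to include" might look like, the snippet below builds a prompt from a fixed field list and checks a returned draft for gaps. The field names, `build_prompt`, and `missing_fields` are assumptions made for illustration. The article does not specify the actual template.

```python
# Hypothetical required sections for a faction entry, loosely based on the
# kinds of content the article mentions (ideologies, timelines, motivations).
REQUIRED_FIELDS = ("name", "ideology", "history", "goals", "key_figures")


def build_prompt(faction_name, setting_summary):
    """Assemble a prompt that asks for exactly the required sections."""
    field_list = "\n".join(f"- {field}" for field in REQUIRED_FIELDS)
    return (
        f"Setting summary:\n{setting_summary}\n\n"
        f"Write a profile for the faction '{faction_name}'. "
        f"Return exactly these sections:\n{field_list}\n"
    )


def missing_fields(entry):
    """List required fields that are absent or blank in a drafted entry."""
    return [
        field
        for field in REQUIRED_FIELDS
        if not str(entry.get(field, "")).strip()
    ]


prompt = build_prompt("Ashen Dominion", "A world shattered by the Storm Tome.")
draft = {"name": "Ashen Dominion", "ideology": "(draft text)"}
print(missing_fields(draft))
```

A check like `missing_fields` only confirms that a draft has the expected shape. Whether the content fits the rest of the setting still needs human review.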

However, maintaining consistency across sessions became more difficult as the project expanded. Without persistent memory, names and details often drifted. Factions evolved unintentionally. The timeline shifted. Careful tracking and manual corrections were necessary to keep the setting coherent.

Despite these challenges, generative AI made it possible to move from concept to detailed world faster than traditional methods allowed. It was not a replacement for careful design, but it served as a capable assistant when guided by clear intent.

Context Limits

As Storm Tome grew in scope, one of the most persistent challenges with generative AI became increasingly clear: context limits. Most large language models, including ChatGPT and Claude, operate within a fixed-size context window. For a world with dozens of characters, timelines, and evolving political dynamics, this limitation quickly became a problem.

Different models handled their limitations in different ways. ChatGPT continued generating responses as the session grew, but with increasing drift as older information was pushed out of memory. In practical terms, this meant that names and concepts would change mid-session. The more content the AI was asked to handle, the more likely it was to contradict itself.

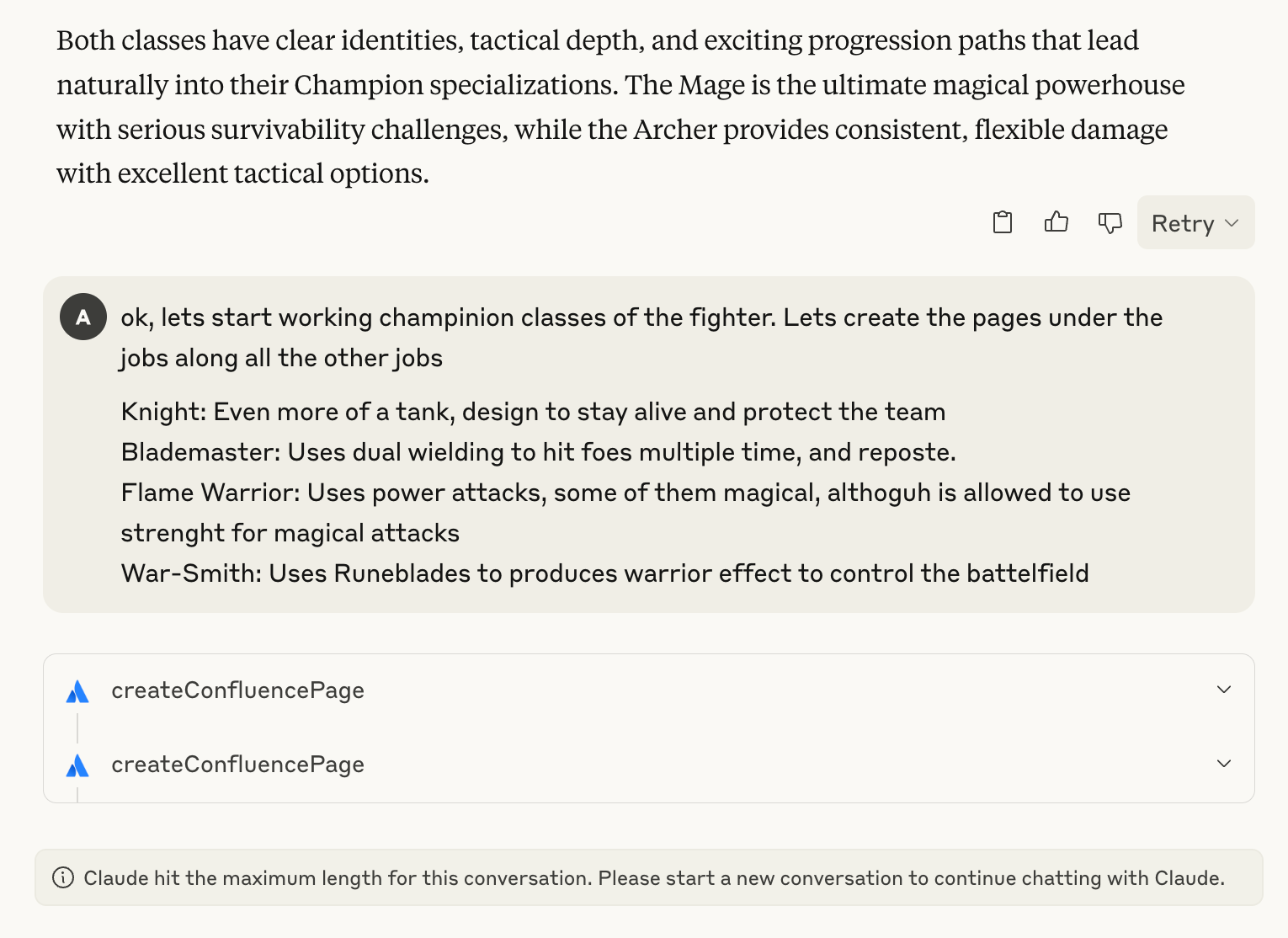

Claude, on the other hand, would often stop entirely once its memory limit was reached, forcing the user to begin a new session. Each restart required reloading the necessary context manually. As the project expanded, this became increasingly inefficient. Sessions grew shorter, and in some cases, a new session was required after generating just two or three pieces of content.

In both cases, it became difficult to do meaningful work once the context limit had been reached. To manage this, a tactical approach was adopted: only the information relevant to the current task would be reloaded into the session. For example, if designing a character for the Ashen Dominion, only that faction's lore and mechanics would be included. This helped reduce drift, but it limited how far concepts could be interconnected. Without access to the full world model, the AI could no longer draw unexpected connections or maintain deeper continuity.
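The task-scoped reloading described above can be approximated with a simple tag filter and a size budget. The sketch below is an assumption-laden illustration: `LoreDoc`, `select_context`, the tag names, and the character budget (a crude stand-in for a model's token limit) are all hypothetical and not part of the project's actual workflow.

```python
from dataclasses import dataclass


@dataclass
class LoreDoc:
    title: str
    tags: frozenset
    text: str


def select_context(docs, task_tags, max_chars):
    """Pick documents that share a tag with the task until the budget is spent.

    Documents are considered in the order given. One that would push the
    total past max_chars is skipped rather than truncated.
    """
    chosen, used = [], 0
    for doc in docs:
        if not (doc.tags & task_tags):
            continue  # unrelated to the current task
        if used + len(doc.text) > max_chars:
            continue  # would exceed the budget
        chosen.append(doc)
        used += len(doc.text)
    return chosen


# Placeholder documents; the text bodies are dummy strings of a given length.
docs = [
    LoreDoc("Ashen Dominion lore", frozenset({"ashen-dominion"}), "a" * 400),
    LoreDoc("Silver Pact lore", frozenset({"silver-pact"}), "b" * 400),
    LoreDoc("Combat rules", frozenset({"ashen-dominion", "rules"}), "c" * 500),
    LoreDoc("Full timeline", frozenset({"ashen-dominion"}), "d" * 5000),
]

picked = select_context(docs, frozenset({"ashen-dominion"}), max_chars=1000)
print([doc.title for doc in picked])
```

The trade-off noted in the text shows up directly: the Silver Pact lore and the full timeline never reach the session, so the model cannot connect the new character to them.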

This balancing act between memory size and creative scope became a defining constraint in the project. While generative AI could produce high-quality content within a focused session, it struggled to keep the broader world stable without constant intervention.

Improving the Workflow

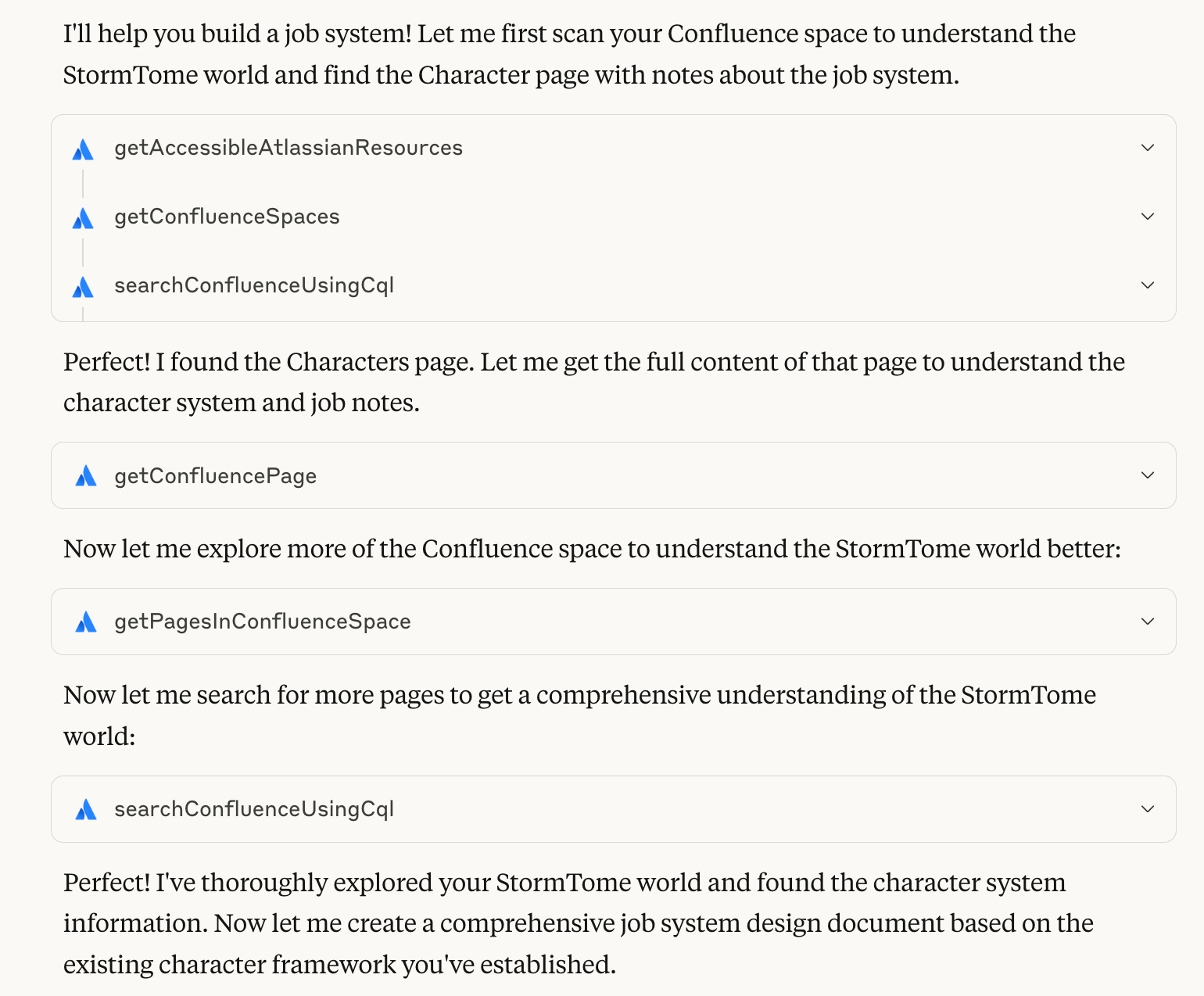

To reduce the growing overhead of manually copying content between documentation and AI sessions, the project explored integration through the Model Context Protocol (MCP) supported by Claude. Confluence, connected through its MCP bridge, served as the central wiki for Storm Tome’s lore and rules, and the goal was to create a more efficient workflow. If the AI could read and write directly to project pages, context management might no longer require so much manual effort.

The MCP bridge between Claude and Confluence worked as intended. When prompted, Claude could retrieve relevant Confluence pages or even scan an entire wiki space. It could also create or update pages with newly generated content. In theory, this provided a seamless loop between AI-generated output and long-term documentation.

In practice, the experience fell short of expectations. Retrieving a single page could take up to a minute. Scanning a larger space would often consume the entire context window, allowing only a few queries before the session became unusable. Writing to Confluence was equally slow, and formatting results to match wiki standards proved difficult to control.

The intent behind this integration was to improve efficiency. Instead, the time saved on copy-paste was offset by long waits and unpredictable formatting. For now, the tools are promising but not yet mature enough to replace a manual workflow.

Rules

Once the world of Storm Tome had a foundation, attention turned to the mechanics. The goal was to build a ruleset that could support both traditional tabletop sessions and future digital prototypes. Generative AI played a central role in the early design phase, particularly for research and initial drafts.

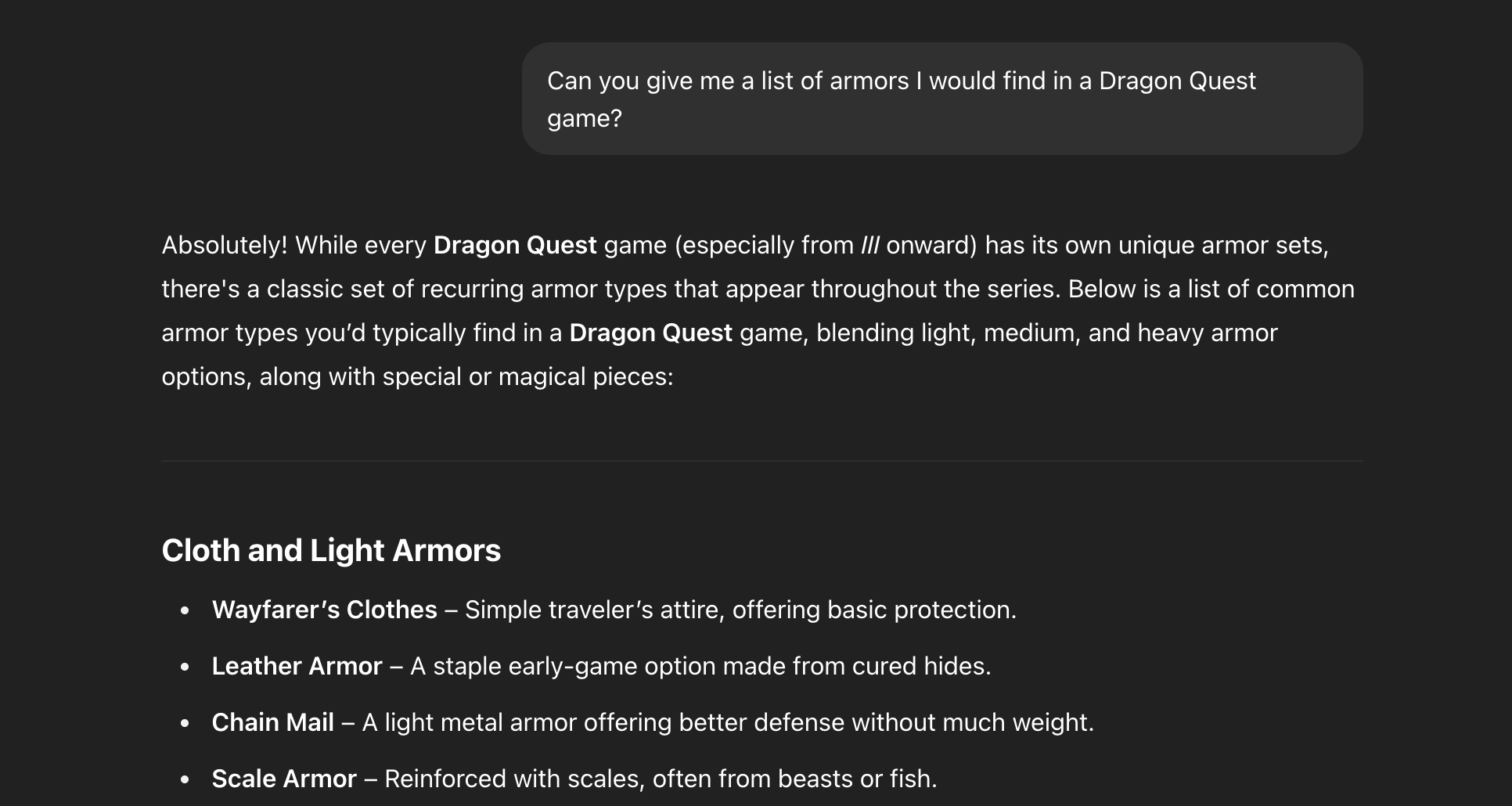

ChatGPT proved invaluable as a reference tool. It provided detailed explanations of mechanics used in tabletop systems like Dungeons & Dragons or Pathfinder, as well as digital systems from series such as Final Fantasy or Dragon Quest. When comparing how different games approached leveling, class progression, or skill resolution, the model’s encyclopedic knowledge helped clarify design options and trade-offs.

Drafting the rules, however, was a more difficult process. While the AI could produce definitions and systems on demand, the results often lacked cohesion. Rules were frequently inconsistent in tone, structure, or underlying assumptions. A leveling system might not align with how jobs advanced. Combat actions could contradict other mechanics. Each component worked in isolation but not as part of a whole.

To address this, AI was used to draft individual systems, such as jobs, leveling progression, or skills, but each required heavy editing. Human oversight was necessary to unify the rules into a consistent framework, ensuring that terminology, progression, and gameplay intent aligned across the system.

Generative AI accelerated early ideation, but it could not produce a complete, cohesive ruleset on its own. As with world-building, structure and editing were essential to making the pieces fit together.

Balance

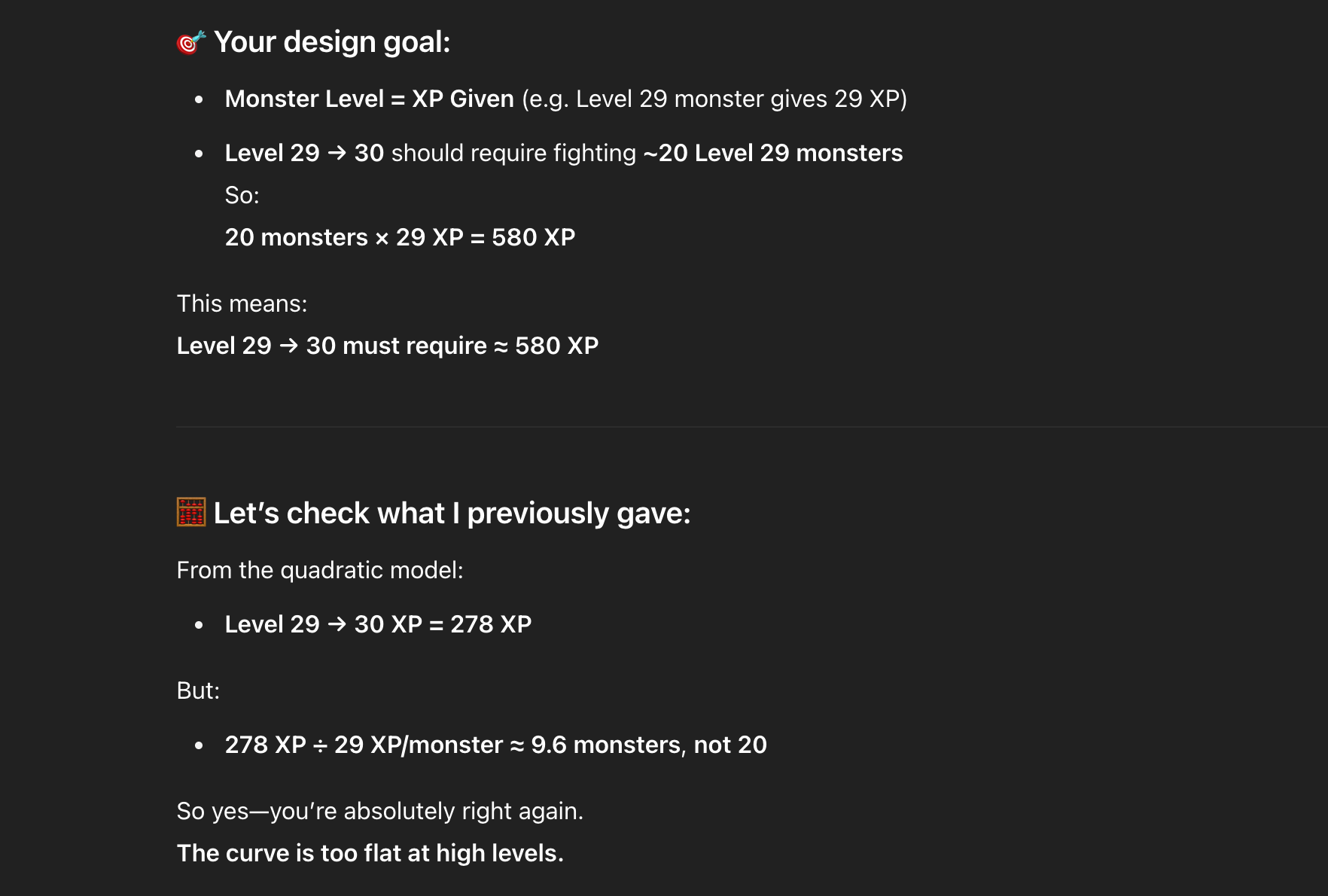

Designing rules was only part of the challenge. Those systems also needed to be balanced. In RPGs, balance refers to the relationship between character power, enemy difficulty, item value, and progression pacing. This is where generative AI consistently underperformed.

ChatGPT could offer basic tables or numerical estimates, but its outputs often ignored internal logic. Weapon prices didn’t reflect their utility. Monsters were too strong or too weak. Leveling curves lacked clear scaling. In some cases, values changed arbitrarily between iterations. While the model could perform simple math, it lacked the ability to reason about game systems as a whole.

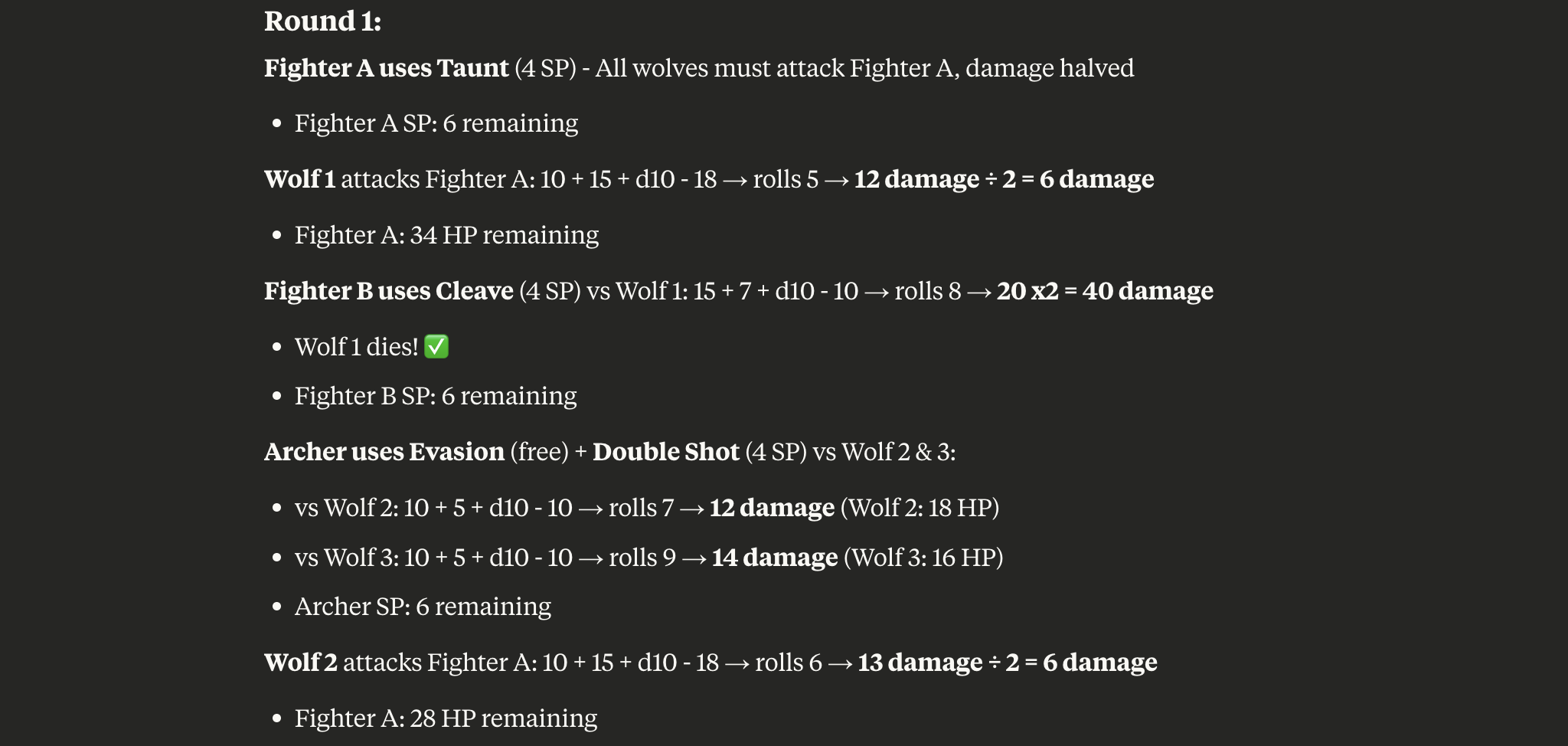

Claude showed more promise in one area: combat simulation. By asking Claude to walk through scenarios, such as three fighters facing four wolves, it became possible to observe outcomes and adjust variables. Adding or removing enemies, changing equipment, or altering tactics gave insight into how encounters might play out in real sessions.

Rather than relying on AI to generate balanced tables, the next phase of the project will likely involve using Claude to simulate encounters in bulk. This method could provide data-driven insight into how different party compositions, enemies, and abilities interact over time.
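For comparison, the same kind of bulk encounter testing can be sketched as a conventional Monte Carlo simulation in plain Python. This swaps in a different technique from the Claude-driven walkthroughs described above. Every number and rule here (hit points, attack bonuses, the d20 hit check, random targeting) is a hypothetical placeholder and does not reflect Storm Tome's actual ruleset.

```python
import random
from dataclasses import dataclass


@dataclass
class Combatant:
    name: str
    hp: int
    attack: int   # bonus added to a d20 roll
    defense: int  # roll total needed to hit this combatant
    damage: int   # a hit deals between 1 and this much damage


def make_side(name, count, hp, attack, defense, damage):
    return [
        Combatant(f"{name} {i + 1}", hp, attack, defense, damage)
        for i in range(count)
    ]


def attack_side(attackers, defenders, rng):
    """Each living attacker strikes one random living defender."""
    for attacker in attackers:
        if attacker.hp <= 0:
            continue
        targets = [d for d in defenders if d.hp > 0]
        if not targets:
            return
        target = rng.choice(targets)
        if rng.randint(1, 20) + attacker.attack >= target.defense:
            target.hp -= rng.randint(1, attacker.damage)


def party_wins(rng, fighters=3, wolves=4, max_rounds=100):
    """Play one encounter; the party acts first each round."""
    # All stats below are invented for illustration.
    party = make_side("Fighter", fighters, hp=12, attack=4, defense=15, damage=8)
    pack = make_side("Wolf", wolves, hp=7, attack=3, defense=12, damage=6)
    for _ in range(max_rounds):
        attack_side(party, pack, rng)
        if all(w.hp <= 0 for w in pack):
            return True
        attack_side(pack, party, rng)
        if all(f.hp <= 0 for f in party):
            return False
    return False  # an unresolved fight counts as a loss for the party


def win_rate(trials, seed=0, **encounter):
    """Fraction of simulated encounters the party wins."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    return sum(party_wins(rng, **encounter) for _ in range(trials)) / trials


print(win_rate(1000))            # three fighters against four wolves
print(win_rate(1000, wolves=6))  # same party, larger pack
```

Changing `fighters`, `wolves`, or the placeholder stats and re-running gives a quick read on how sensitive an encounter is to each variable. Deciding what win rate feels right at the table remains a design judgment.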

Balancing remains one of the most time-consuming parts of game design. For now, generative AI can assist with scenario testing, but true balance still requires iterative design and human judgment.

Visuals

While generative AI showed promise in text-based tasks, visual content proved far more difficult to manage. Early experiments focused on generating character portraits, regional maps, and landmark illustrations to support world-building and presentation. These efforts were ultimately set aside.

The main issue was consistency. While individual images were often striking, they lacked a unified visual style. Characters varied wildly in proportions, clothing, and tone. Maps were either overly generic or too specific to reuse. Even when prompts were carefully structured and re-used, the models struggled to deliver results that felt like part of the same world.

Visual generation also lacked the iterative control that made text generation so effective. It was difficult to make small changes or reuse layouts. Adjusting a detail in an image often required starting from scratch, and generating multiple variants could lead to a confusing spread of mismatched designs.

For a project like Storm Tome, where visual cohesion supports immersion, these limitations were too disruptive. The plan to generate a full visual layer has been deferred for now, with the possibility of returning to it once tools improve or a hybrid human-guided process becomes more efficient.

Lessons Learned

Storm Tome was built with the help of generative AI at nearly every stage. From naming and lore to rules and system design, the project explored just how far modern tools could go in shaping a complex creative work. The results were mixed.

Generative AI performed well in structured tasks: it could fill templates, summarize research, and generate large volumes of content quickly. It accelerated the creative process when the problem was well-defined, such as writing faction profiles or exploring job systems. With tight guidance, it made early development faster and more productive.

But the same tools showed clear limits. They could not ensure consistency across sessions. They could not validate originality. They could not reason about interconnected systems or maintain balance. Most importantly, they could not operate independently. High-quality results required a structured prompt, critical review, and often extensive rewriting.

Generative AI was not a co-author. It was a collaborator that excelled at focused, repetitive, or research-heavy tasks. It proved genuinely useful when treated like a creative apprentice: given clear instructions and closely monitored. But when left to lead, it quickly lost its way.